Introduction

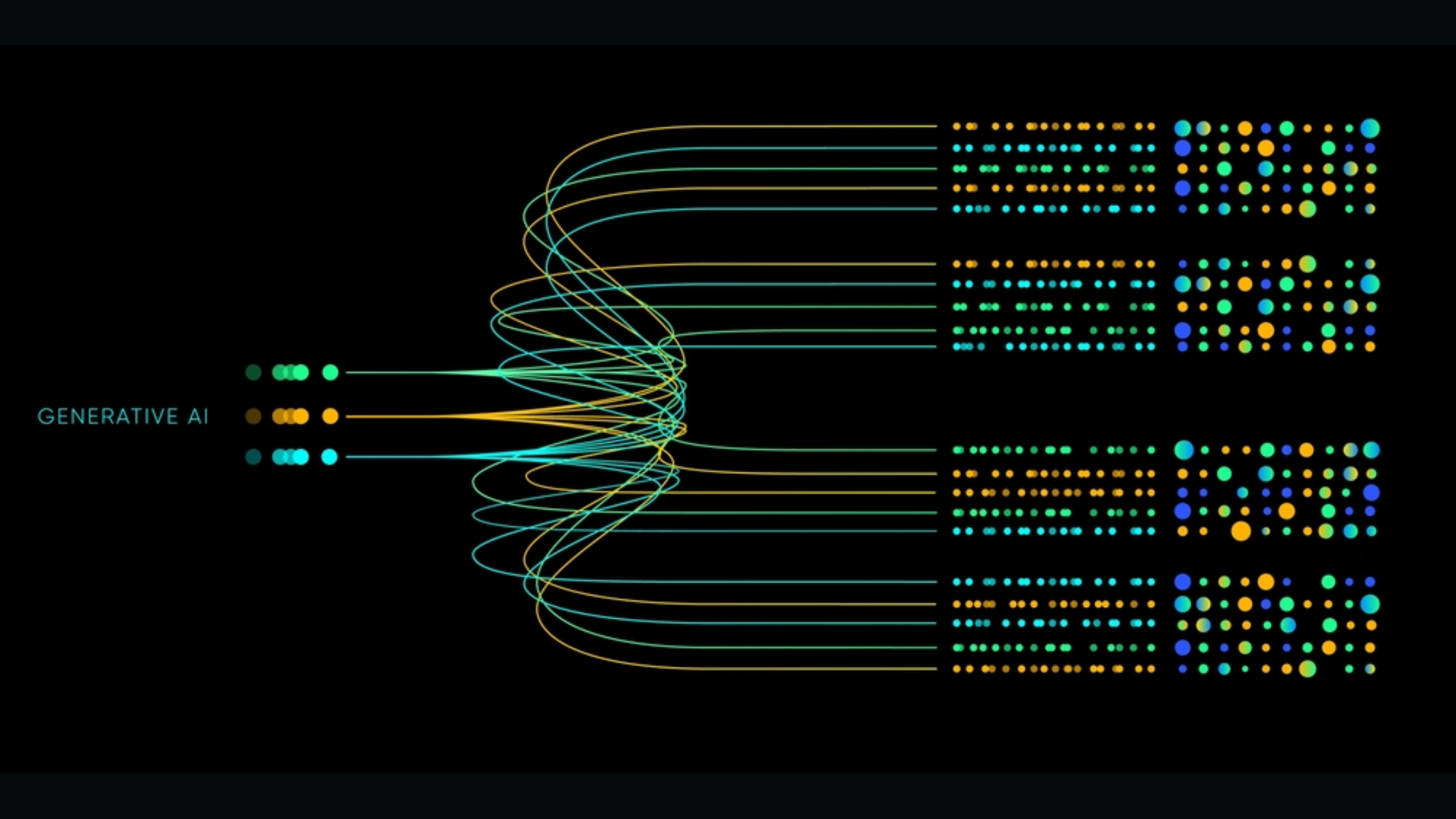

Large Language Models (LLMs) have gained widespread recognition for their pivotal role in popularizing generative artificial intelligence and driving its integration into various aspects of everyday life. These models have captured attention due to their ability to understand and generate human-like text, sparking interest and investment from organizations eager to harness AI’s potential across diverse business functions and applications.

This blog will delve into:

- The Meaning of a Large Language Model

- Components of a Large Language Model

- How do LLMs work?

- Use Cases and Popular Examples of Large Language Models

What is a large language model?

A Large Language Model (LLM) is an advanced artificial intelligence system designed to process and understand human language at scale. These models are trained on vast amounts of text data, enabling them to generate coherent and contextually relevant text based on user input prompts.

Imagine you’re using a virtual assistant named “Tour” to help you plan a trip. You ask Tour, “What are some popular attractions in Paris?” In response, Tour utilizes its vast knowledge of language patterns and context, drawing from its training data. It generates a detailed list of popular tourist destinations in Paris, complete with descriptions, ratings, and recommendations based on your preferences. This ability to understand your query and generate relevant text in natural language is a hallmark of Large Language Models.

Gartner defines a large language model as “a specialized type of artificial intelligence that has been trained on vast amounts of text to understand existing content and generate original content.”

According to market research, the Large Language Model (LLM) market was valued at USD 10.5 billion in 2022. It is projected to reach USD 40.8 billion by 2029, a compound annual growth rate (CAGR) of 21.4% from 2023 to 2029.

Some more key statistics to note:

According to Gartner’s projection, by 2026, half of all office workers in Fortune 100 companies will be using some form of AI augmentation.

McKinsey reports that implementing Large Language Models (LLMs) in customer operations could raise productivity by a value of up to 45% of current function costs.

Having access to a Large Language Model could enable about 15% of all worker tasks in the US to be completed notably faster while maintaining the same level of quality.

Components of LLMs

Large Language Models consist of several key components that work together to understand and generate human-like text. Here’s a breakdown of all of them:

Preprocessing – Before text is fed into the model, it is preprocessed. The central step is tokenization, which divides the text into smaller units such as words or subwords and maps each unit to a numeric ID. Some pipelines also lowercase the text, remove punctuation, and normalize special characters, although many modern LLM tokenizers keep case and punctuation because they carry meaning.
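To make the tokenization step concrete, here is a minimal sketch that assumes a simple word-level tokenizer. The `word_tokenize` and `build_vocab` helpers are hypothetical names chosen for illustration; production LLMs typically use learned subword tokenizers rather than a regular expression like this one.

```python
import re


def word_tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Give each distinct token an integer ID in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


tokens = word_tokenize("What are some popular attractions in Paris?")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)
# ['what', 'are', 'some', 'popular', 'attractions', 'in', 'paris', '?']
print(token_ids)
# [0, 1, 2, 3, 4, 5, 6, 7]
```

The model never sees the raw string, only the list of integer IDs.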

Architecture – LLMs are typically built on deep learning architectures, most commonly the Transformer. The original Transformer uses an encoder-decoder design, in which the encoder processes the input text and the decoder generates the output text. Many LLMs use only one half, such as the encoder-only BERT or the decoder-only GPT family. Each encoder and decoder layer contains sublayers such as a self-attention mechanism and a feedforward neural network.

Self-Attention Mechanism – This mechanism allows the model to weigh the importance of different words in a sentence when processing it. It computes attention scores for each word based on its relationship with other words in the sentence. Self-attention enables the model to capture long-range dependencies and understand contextual relationships within the text.
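The attention scores described above can be sketched in a few lines of plain Python. This is a simplified illustration of scaled dot-product attention, not a full implementation: it assumes the queries, keys, and values are the raw input vectors, whereas real models first pass the input through learned projection matrices and use several attention heads.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token against every token, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        )
    return outputs, weights


# Three toy "token" vectors; the same list serves as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
```

Each row of `weights` shows how much one token attends to every token in the sequence, including itself.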

Positional Encoding – Self-attention on its own has no notion of word order, so positional encoding is added to tell the model where each word sits in the input sequence. This lets the model learn from the order of words as well as their content.
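One well-known scheme is the sinusoidal encoding from the original Transformer paper, sketched below. Treat it as one possible choice rather than what every LLM does, since many models use learned or rotary position embeddings instead. The function name and the small sizes are illustrative.

```python
import math


def positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Sinusoidal position table: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one wavelength.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


pe = positional_encoding(seq_len=4, d_model=6)
print(pe[0])
# [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Each row is added to the embedding of the token at that position, so two identical words at different positions end up with different input vectors.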

Feedforward Neural Networks (FFNN) – Each Transformer layer contains a feedforward neural network as one of its sublayers, placed after self-attention. These networks apply linear transformations followed by non-linear activation functions to process the information captured by the self-attention mechanism. FFNNs help the model learn complex patterns and relationships within the text data.
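As a rough sketch, a feedforward block is two linear transformations with a non-linearity in between. The weights below are made-up numbers chosen so the arithmetic is easy to follow, and ReLU is assumed as the activation; real models learn their weights and often use other activations such as GELU.

```python
def linear(x, weights, bias):
    """Compute one output per weight row: dot(row, x) + bias."""
    return [sum(xi * w for xi, w in zip(x, row)) + b for row, b in zip(weights, bias)]


def relu(x):
    """Non-linear activation: negative values become zero."""
    return [max(0.0, v) for v in x]


def feed_forward(x, w1, b1, w2, b2):
    """Expand to a hidden layer, apply ReLU, then project back down."""
    return linear(relu(linear(x, w1, b1)), w2, b2)


# Hypothetical weights: 2 inputs -> 3 hidden units -> 2 outputs.
w1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 1.0, 1.0], [1.0, 0.0, -1.0]]
b2 = [0.0, 0.0]

y = feed_forward([2.0, 1.0], w1, b1, w2, b2)
print(y)
# [2.5, 1.0]
```

The hidden layer here evaluates to `[1.0, 1.5, -1.0]`, ReLU zeroes out the negative entry, and the second linear layer combines what remains.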

Training Data – LLMs require large amounts of text data for training. This data is typically sourced from diverse sources such as books, articles, websites, and other textual sources. The model learns to predict the next word in a sequence based on the context provided by the preceding words.
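The idea of learning to predict the next word from preceding words can be illustrated with a very small stand-in. The sketch below is a bigram counter, not a neural network: it only looks at one previous word and simply counts what follows it in a tiny made-up corpus. An LLM does the same job with far more context and billions of learned parameters.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```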

Fine-Tuning – After pretraining on a large corpus of text data, LLMs can be fine-tuned on specific tasks or domains to improve performance. Fine-tuning involves updating the model’s parameters on a smaller dataset related to the target task, allowing it to adapt to the nuances of the task at hand.

Output Layer – The output layer of an LLM produces probabilities for the next word in a sequence based on the input context. It is typically a linear projection followed by a softmax function, which yields a probability distribution over the vocabulary. The model then picks the most likely next word or samples one from that distribution.
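Here is a small sketch of that final step, assuming a four-word vocabulary and hand-picked numbers for the hidden state and output weights (both hypothetical). It scores every vocabulary word, converts the scores to probabilities with softmax, and takes the most likely word.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["paris", "london", "pizza", "the"]

# Hypothetical final hidden state and output weights (one row per vocabulary word).
hidden = [0.5, -1.0, 2.0]
w_out = [
    [1.0, 0.0, 1.0],   # paris
    [0.5, 0.5, 0.5],   # london
    [-1.0, 1.0, 0.0],  # pizza
    [0.0, 0.0, 0.2],   # the
]

# Linear projection: one score (logit) per vocabulary word.
logits = [sum(h * w for h, w in zip(hidden, row)) for row in w_out]
probs = softmax(logits)

# Greedy choice: take the word with the highest probability.
next_word = vocab[probs.index(max(probs))]
print(next_word)  # paris
```

Picking the top word every time is called greedy decoding. Sampling from `probs` instead gives more varied output.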

How do LLMs work?

A crucial aspect of how Large Language Models function lies in their approach to representing words. In the past, traditional machine learning methods relied on simple numerical tables to represent each word. However, this approach fell short of capturing intricate relationships between words, such as words with synonymous meanings or those with semantic connections. To address this limitation, LLMs employ multi-dimensional vectors, known as word embeddings, to represent words in a manner that preserves their contextual and semantic relationships.
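To see how vectors can capture similarity of meaning, consider the sketch below. The three-dimensional vectors are invented for illustration; real embeddings are learned during training and usually have hundreds or thousands of dimensions. Cosine similarity is one common way to compare them.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: closer to 1.0 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: "happy" and "joyful" point in nearly the same direction.
embeddings = {
    "happy": [0.9, 0.8, 0.1],
    "joyful": [0.85, 0.75, 0.2],
    "car": [0.1, 0.2, 0.95],
}

sim_synonyms = cosine_similarity(embeddings["happy"], embeddings["joyful"])
sim_unrelated = cosine_similarity(embeddings["happy"], embeddings["car"])

print(sim_synonyms > sim_unrelated)  # True
```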

Using word embeddings, the transformer converts text into numerical representations and passes them through an encoder. The encoder captures the context of words and phrases, recognizing similarities in meaning and other linguistic relationships, such as parts of speech. A decoder then draws on this understanding to generate output text, applying what the model has learned about the language.

Use cases and popular examples of large language models

Large Language Models have revolutionized various industries with their advanced natural language processing capabilities. Here are five popular use cases for LLMs along with examples of how they are applied:

Text Generation and Content Creation – LLMs are adept at generating human-like text. They are valuable for content creation tasks such as writing articles, generating product descriptions, or composing marketing copy. OpenAI’s GPT-3 has been used to generate blog posts, news articles, and even code snippets for software development.

Language Translation – LLMs can translate text from one language to another with high accuracy, enabling communication across different linguistic backgrounds. Google Translate, for example, uses Transformer-based neural models to translate between over 100 languages, facilitating global communication.

Sentiment Analysis – LLMs can analyze the sentiment of text data, helping businesses understand customer opinions, detect trends, and gauge public sentiment towards products or brands. BERT (Bidirectional Encoder Representations from Transformers) has been used for sentiment analysis in social media monitoring tools and customer feedback analysis platforms.

Chatbots and Virtual Assistants – LLMs power conversational agents, chatbots, and virtual assistants that can understand and respond to user queries in natural language, enhancing customer service and user engagement. OpenAI’s ChatGPT, a chatbot built on its GPT models, can hold human-like conversations, provide assistance, answer questions, and even generate creative responses.

Text Summarization – LLMs can condense lengthy documents or articles into concise summaries, saving time and effort for information retrieval and comprehension. Facebook’s BART (Bidirectional and Auto-Regressive Transformers) model has been applied to text summarization tasks, producing accurate and coherent summaries of news articles, research papers, and other textual content.

Wrapping up

Large Language Models represent a transformative leap in artificial intelligence, enabling major advances in natural language understanding and generation. As these models continue to evolve and spread, they promise to reshape the way we interact with technology, paving the way for more intuitive and efficient communication in our increasingly interconnected world.

For further exploration of Generative AI, Large Language Models, and Machine Learning, visit the Datacrew blog page.